DGX DL WS P3487 4A100/256GB 40G, REG A-INC

The NVIDIA DGX Station is a fast, multi-GPU workstation for deep learning and AI analytics. You can use the DGX Station to run neural networks and deploy deep learning models. Because the DGX Station is software-compatible with the NVIDIA DGX-1 server, you can also use it to optimize applications that will run on a production DGX-1 cluster.

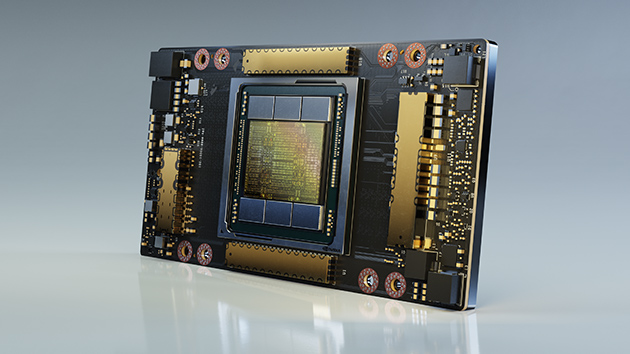

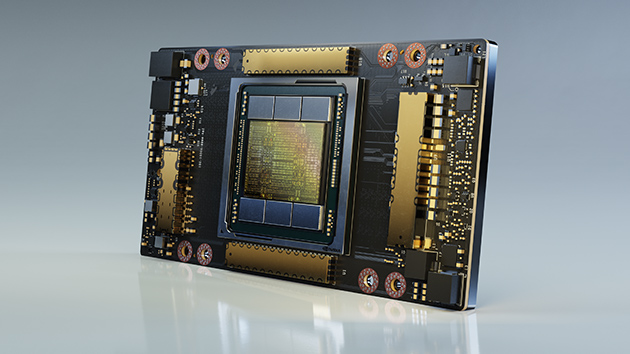

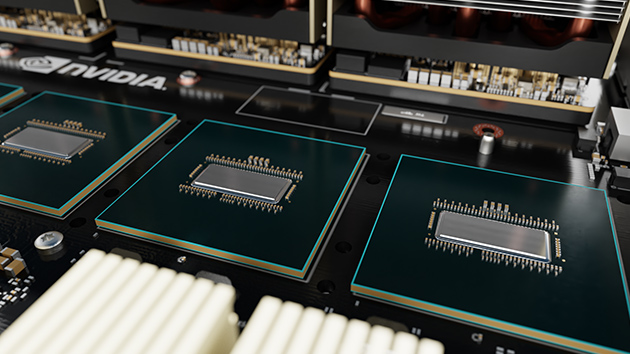

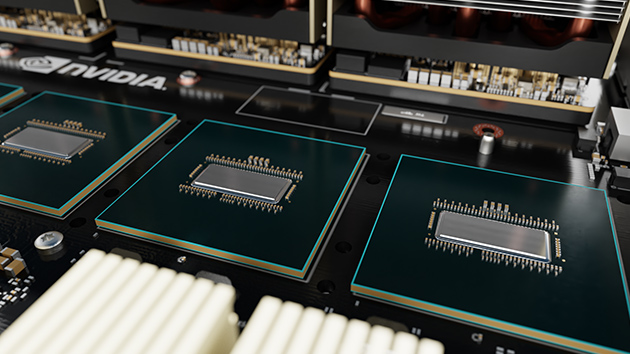

NVIDIA DGX Station™ A100 is the world’s fastest workstation for data science teams. With four NVIDIA A100 Tensor Core GPUs, fully interconnected with NVIDIA® NVLink® architecture, DGX Station A100 delivers 2.5 petaFLOPS of AI performance, bringing the power of a data center to the convenience of your office.
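A quick sanity check of the 2.5 petaFLOPS figure, assuming (this is not stated above) that it refers to peak FP16 Tensor Core throughput with structured sparsity, which NVIDIA specifies as 624 TFLOPS per A100 GPU (312 TFLOPS dense):

```latex
\[
4 \times 624\ \text{TFLOPS} = 2496\ \text{TFLOPS} \approx 2.5\ \text{petaFLOPS}
\]
```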

DGX Station A100 is a product aimed at science and business, specifically research and engineering work that involves processing huge amounts of data with the help of machine learning and artificial intelligence. Inside the computer's gold chassis is a 64-core AMD processor with 512 GB of RAM and a 7.68 TB NVMe SSD. NVIDIA claims it is the only workgroup server that supports its proprietary Multi-Instance GPU (MIG) technology. This means DGX Station A100 can provide 28 separate GPU instances that can run parallel jobs or serve multiple users. It is also worth noting that memory bandwidth exceeds 2 terabytes per second.

DGX Station A100 Hardware Summary

| Processors | System Memory and Storage | Connecting and Powering on the DGX Station A100 |
|---|---|---|
| CPU: single AMD 7742, 64 cores, 2.25 GHz (base) to 3.4 GHz (max boost) | 8x 64 GB DDR4 system RAM | Mini DisplayPort 1.2 to DisplayPort cable |
| GPUs: four NVIDIA A100, with 80 GB (320 GB total) or 40 GB (160 GB total) of memory per GPU | 7.68 TB U.2 NVMe cache/data drive | USB keyboard and USB mouse |
| | 1.92 TB M.2 NVMe boot (OS) drive | Ethernet cable |

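| Specification | DGX Station A100 |
|---|---|
| GPUs | 4x NVIDIA A100 Tensor Core GPUs |
| GPU Memory | 160 GB total (40 GB per GPU) or 320 GB total (80 GB per GPU) |
| Performance | 2.5 petaFLOPS of AI performance |
| System Power Usage | |
| CPU | Single AMD 7742, 64 cores, 2.25 GHz (base) to 3.4 GHz (max boost) |
| System Memory | 512 GB DDR4 (8x 64 GB) |
| Storage | OS: 1.92 TB M.2 NVMe; cache/data: 7.68 TB U.2 NVMe |
| DGX Display Adapter | |
| Network | |
| System Acoustic | |
| System Weight | |
| System Dimensions | |
| Operating Temperature Range | |
| Software | |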

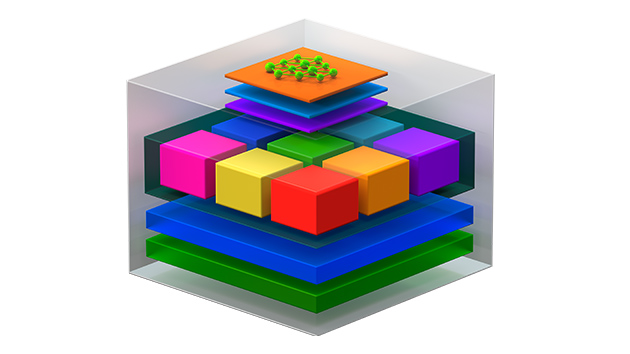

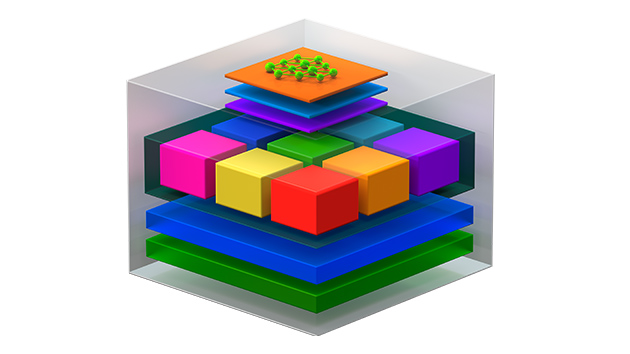

The NVIDIA A100 Tensor Core GPU delivers unprecedented acceleration for AI, data analytics, and high-performance computing (HPC) to tackle the world’s toughest computing challenges. With third-generation NVIDIA Tensor Cores providing a large performance boost, the A100 GPU can scale efficiently to thousands of GPUs or, with Multi-Instance GPU (MIG), be partitioned into seven smaller, dedicated instances to accelerate workloads of all sizes.

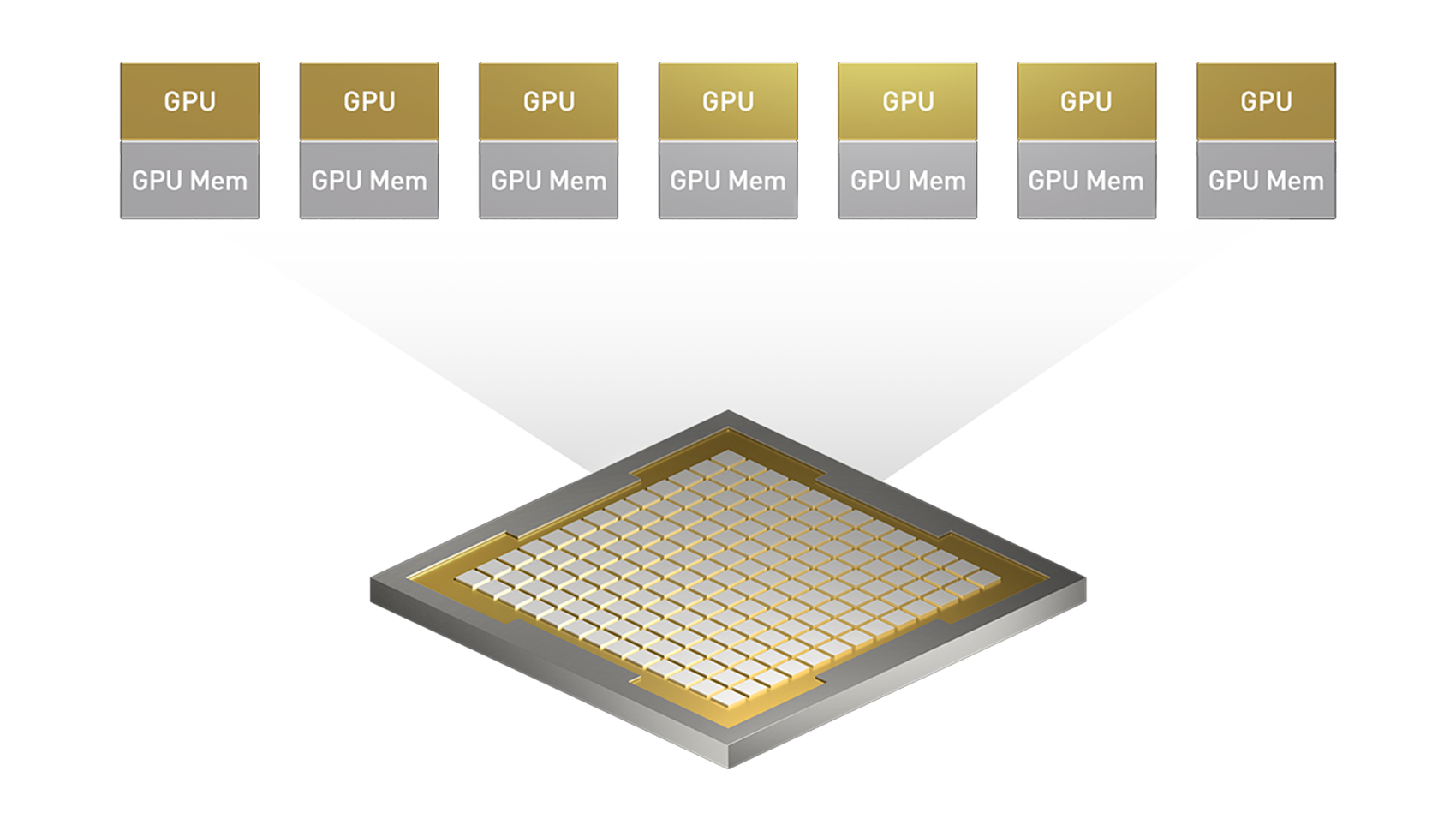

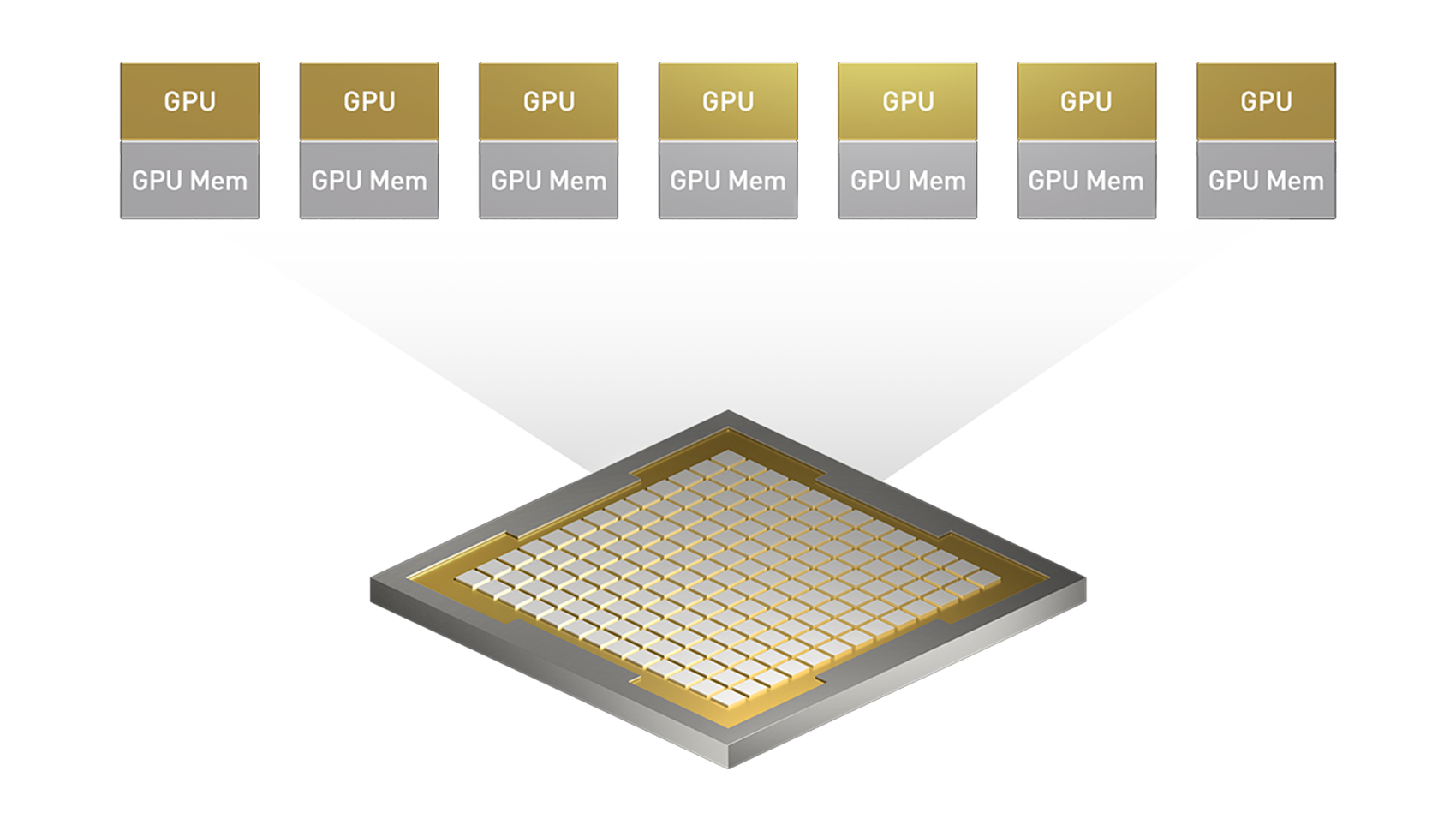

With MIG, the eight A100 GPUs in DGX A100 can be configured into as many as 56 GPU instances, each fully isolated with its own high-bandwidth memory, cache, and compute cores. This allows administrators to right-size GPUs with guaranteed quality of service (QoS) for multiple workloads.
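The instance ceiling is simple multiplication: each A100 can be split into at most seven MIG instances, so the system-wide maximum scales with the GPU count. The minimal sketch below applies that rule to the eight-GPU DGX A100 and the four-GPU DGX Station A100; the constant and function names are illustrative, not part of any NVIDIA API.

```python
# Upper bound on MIG instances per system. Each A100 supports at most seven
# GPU instances (all using the smallest profile), so the total is 7 x GPUs.
# Names here are illustrative, not an NVIDIA API.
MAX_MIG_INSTANCES_PER_A100 = 7


def max_mig_instances(num_gpus: int) -> int:
    """Return the maximum number of MIG GPU instances for num_gpus A100 GPUs."""
    if num_gpus < 0:
        raise ValueError("num_gpus must be non-negative")
    return MAX_MIG_INSTANCES_PER_A100 * num_gpus


if __name__ == "__main__":
    print("DGX A100 (8 GPUs):", max_mig_instances(8))
    print("DGX Station A100 (4 GPUs):", max_mig_instances(4))
```

This yields 56 for DGX A100 and 28 for DGX Station A100.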

A GPU can be partitioned into MIG instances of different sizes. For example, on an NVIDIA A100 40GB, an administrator could create two instances with 20 gigabytes (GB) of memory each, three instances with 10 GB each, seven instances with 5 GB each, or a mix of sizes.
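To see why those particular mixes work, it helps to model an A100 40GB as a budget of 7 compute slices and 8 memory slices of 5 GB each, with each MIG profile consuming a fixed number of both. The sketch below is a simplified model under that assumption: the profile table reflects the A100 40GB profile names, but real MIG also enforces placement rules, so passing this budget check is necessary but not sufficient on actual hardware.

```python
from typing import List

# Simplified A100 40GB resource model (assumption for illustration):
# 7 compute slices and 8 memory slices of 5 GB each. Placement rules that
# real MIG enforces are deliberately ignored here.
COMPUTE_SLICES = 7
MEMORY_SLICES = 8
GB_PER_MEMORY_SLICE = 5

# profile name -> (compute slices, memory slices)
PROFILES = {
    "1g.5gb": (1, 1),
    "2g.10gb": (2, 2),
    "3g.20gb": (3, 4),
    "4g.20gb": (4, 4),
    "7g.40gb": (7, 8),
}


def fits_budget(instances: List[str]) -> bool:
    """Return True if the requested profiles fit one GPU's slice budget."""
    compute = sum(PROFILES[name][0] for name in instances)
    memory = sum(PROFILES[name][1] for name in instances)
    return compute <= COMPUTE_SLICES and memory <= MEMORY_SLICES


def total_memory_gb(instances: List[str]) -> int:
    """Total memory assigned to the instances."""
    return GB_PER_MEMORY_SLICE * sum(PROFILES[name][1] for name in instances)


if __name__ == "__main__":
    examples = {
        "two 20 GB instances": ["3g.20gb"] * 2,
        "three 10 GB instances": ["2g.10gb"] * 3,
        "seven 5 GB instances": ["1g.5gb"] * 7,
        "a mix": ["3g.20gb", "2g.10gb", "1g.5gb", "1g.5gb"],
        "too large": ["4g.20gb"] * 2,
    }
    for label, combo in examples.items():
        print(f"{label}: fits={fits_budget(combo)}, memory={total_memory_gb(combo)} GB")
```

The first four layouts pass the check; two 4g.20gb instances fail because they would need 8 compute slices. On a real system, the supported profiles can be listed with `nvidia-smi mig -lgip`.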

MIG instances can also be dynamically reconfigured, enabling administrators to shift GPU resources in response to changing user and business demands. For example, seven MIG instances can be used during the day for low-throughput inference and reconfigured to one large MIG instance at night for deep learning training.
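A day/night switch like the one described could be scripted around `nvidia-smi mig`. The sketch below only builds the command lines and prints them by default (a dry run). It assumes the documented order of destroying compute instances (`-dci`) before GPU instances (`-dgi`), then creating new GPU instances with `-cgi` and a default compute instance in each with `-C`. The `LAYOUTS` table is hypothetical, and profile names should be checked against `nvidia-smi mig -lgip` on your driver. Actually executing the commands requires root and idle GPU instances.

```python
import subprocess
from typing import Dict, List

# Hypothetical day/night layouts for one A100 40GB (profile names assumed;
# verify with `nvidia-smi mig -lgip`).
LAYOUTS: Dict[str, List[str]] = {
    "day": ["1g.5gb"] * 7,  # seven small instances for low-throughput inference
    "night": ["7g.40gb"],   # one full-size instance for training
}


def reconfigure_commands(gpu: int, layout: str) -> List[List[str]]:
    """Build the nvidia-smi calls that replace the MIG layout on one GPU.

    Compute instances are destroyed before GPU instances; -C asks nvidia-smi
    to create a default compute instance inside each new GPU instance.
    """
    profiles = ",".join(LAYOUTS[layout])
    return [
        ["nvidia-smi", "mig", "-i", str(gpu), "-dci"],
        ["nvidia-smi", "mig", "-i", str(gpu), "-dgi"],
        ["nvidia-smi", "mig", "-i", str(gpu), "-cgi", profiles, "-C"],
    ]


def apply(commands: List[List[str]], dry_run: bool = True) -> None:
    """Print the commands, or run them (needs root; fails if GPU is busy)."""
    for argv in commands:
        if dry_run:
            print(" ".join(argv))
        else:
            subprocess.run(argv, check=True)


if __name__ == "__main__":
    apply(reconfigure_commands(gpu=0, layout="night"))
```

Keeping the command construction separate from execution makes it easy to review the plan (or log it from a scheduler job) before touching a live GPU.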

With a dedicated set of hardware resources for compute, memory, and cache, each MIG instance delivers guaranteed QoS and fault isolation. This means that a failure in an application running on one instance doesn't affect applications running on other instances.

It also means that different instances can run different types of workloads—interactive model development, deep learning training, AI inference, or HPC applications. Since the instances run in parallel, the workloads also run in parallel—but separate and isolated—on the same physical GPU.
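In practice, running different workloads side by side usually means starting one process per MIG instance and exposing exactly one instance to each process through the `CUDA_VISIBLE_DEVICES` environment variable, which accepts MIG device UUIDs. The sketch below assumes placeholder UUIDs and hypothetical job scripts (`serve_model.py`, `train.py`). On a real system, the actual UUIDs are listed by `nvidia-smi -L`. It prints the launch lines by default instead of starting processes.

```python
import os
import subprocess
from typing import Dict, List, Optional

# Placeholder MIG device UUIDs (hypothetical). Real ones appear in the
# output of `nvidia-smi -L`, one line per MIG device.
MIG_UUIDS = [
    "MIG-00000000-0000-0000-0000-000000000001",
    "MIG-00000000-0000-0000-0000-000000000002",
]

# Hypothetical workloads: an inference server and a training job.
JOBS = [
    ["python", "serve_model.py"],
    ["python", "train.py", "--epochs", "1"],
]


def env_for_instance(mig_uuid: str, base: Optional[Dict[str, str]] = None) -> Dict[str, str]:
    """Copy the environment and expose exactly one MIG instance to CUDA."""
    env = dict(os.environ if base is None else base)
    env["CUDA_VISIBLE_DEVICES"] = mig_uuid
    return env


def launch_all(jobs: List[List[str]], uuids: List[str], dry_run: bool = True) -> List[subprocess.Popen]:
    """Start one process per (job, MIG instance) pair so they run in parallel."""
    if len(jobs) > len(uuids):
        raise ValueError("more jobs than MIG instances")
    procs = []
    for argv, uuid in zip(jobs, uuids):
        if dry_run:
            print(f"CUDA_VISIBLE_DEVICES={uuid} {' '.join(argv)}")
        else:
            procs.append(subprocess.Popen(argv, env=env_for_instance(uuid)))
    return procs


if __name__ == "__main__":
    launch_all(JOBS, MIG_UUIDS)
```

Because each process sees only its own instance, a crash or memory blowup in one job stays confined to that instance.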

Without MIG, different jobs running on the same GPU, such as different AI inference requests, compete for the same resources. A job that consumes more memory bandwidth starves the others, causing several jobs to miss their latency targets. With MIG, jobs run simultaneously on different instances, each with dedicated compute, memory, and memory bandwidth, resulting in predictable performance with QoS and maximum GPU utilization.
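The contention effect can be illustrated with a deliberately crude toy model. This is an assumption for illustration, not measured data: when jobs share one bandwidth pool that is oversubscribed, every job is throttled by the same factor; when each job owns a fixed MIG slice, it receives `min(demand, slice)` regardless of its neighbors. The demand and slice numbers below are hypothetical.

```python
from typing import List

# Toy model (illustrative assumption, not a measurement): one GPU has
# TOTAL_BW units of memory bandwidth.
TOTAL_BW = 7.0


def shared_gpu(demands: List[float]) -> List[float]:
    """Without MIG: if total demand exceeds capacity, throttle all jobs equally."""
    total_demand = sum(demands)
    scale = min(1.0, TOTAL_BW / total_demand) if total_demand > 0 else 1.0
    return [d * scale for d in demands]


def mig_gpu(demands: List[float], slices: List[float]) -> List[float]:
    """With MIG: each job gets at most its own fixed slice, unaffected by others."""
    if sum(slices) > TOTAL_BW:
        raise ValueError("slices exceed the GPU's bandwidth")
    return [min(d, s) for d, s in zip(demands, slices)]


if __name__ == "__main__":
    demands = [1.0, 1.0, 1.0, 10.0]  # three light inference jobs, one heavy job
    slices = [1.0, 1.0, 1.0, 4.0]    # hypothetical per-instance bandwidth shares
    for name, got in (("shared", shared_gpu(demands)), ("MIG", mig_gpu(demands, slices))):
        met = sum(g >= d for g, d in zip(got[:3], demands[:3]))
        print(f"{name}: light jobs meeting demand = {met}/3, received = {[round(g, 2) for g in got]}")
```

In the shared case, the heavy job pulls every light job down to about 0.54 of what it needs. In the partitioned case, all three light jobs get their full demand, and only the heavy job is capped.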

The third generation of NVIDIA® NVLink™ in DGX A100 doubles the direct GPU-to-GPU bandwidth to 600 gigabytes per second (GB/s), almost 10X higher than PCIe Gen4. DGX A100 also features next-generation NVIDIA NVSwitch™, which is 2X faster than the previous generation.
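The "almost 10X" comparison can be checked with back-of-the-envelope arithmetic. The sketch assumes 12 third-generation NVLink links per A100 at 50 GB/s each, with both directions combined. It also derives PCIe Gen4 x16 bandwidth from 16 GT/s per lane with 128b/130b encoding, again counting both directions.

```python
# Assumed figures: A100 has 12 NVLink 3 links at 50 GB/s each (both directions
# combined); PCIe Gen4 runs at 16 GT/s per lane with 128b/130b encoding.
NVLINK3_LINKS = 12
NVLINK3_GBYTES_PER_LINK = 50


def pcie_x16_bidirectional_gbytes(gt_per_s: float) -> float:
    """Approximate PCIe x16 bandwidth in GB/s, both directions combined."""
    lanes = 16
    per_direction = gt_per_s * (128 / 130) * lanes / 8  # Gb/s -> GB/s
    return 2 * per_direction


nvlink3_total = NVLINK3_LINKS * NVLINK3_GBYTES_PER_LINK
pcie4 = pcie_x16_bidirectional_gbytes(16.0)
ratio = nvlink3_total / pcie4

print(f"NVLink 3 total: {nvlink3_total} GB/s")
print(f"PCIe Gen4 x16:  {pcie4:.1f} GB/s")
print(f"Ratio:          {ratio:.1f}x")
```

This gives 600 GB/s against roughly 63 GB/s, a ratio of about 9.5x, which is consistent with "almost 10X".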

The fourth generation of NVIDIA® NVLink® technology provides 1.5X higher bandwidth and improved scalability for multi-GPU system configurations. A single NVIDIA H100 Tensor Core GPU supports up to 18 NVLink connections for a total bandwidth of 900 gigabytes per second (GB/s)—over 7X the bandwidth of PCIe Gen5.
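The headline numbers follow from simple arithmetic. The calculation assumes 50 GB/s per fourth-generation NVLink connection and 600 GB/s for third-generation NVLink on A100. It also assumes a nominal 128 GB/s for a PCIe Gen5 x16 link, counting both directions:

```latex
\[
\begin{aligned}
18 \times 50\ \text{GB/s} &= 900\ \text{GB/s} \\
\frac{900\ \text{GB/s}}{600\ \text{GB/s}} &= 1.5 \\
\frac{900\ \text{GB/s}}{128\ \text{GB/s}} &\approx 7.0
\end{aligned}
\]
```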

Servers like the NVIDIA DGX™ H100 take advantage of this technology to deliver greater scalability for ultrafast deep learning training.

DGX A100 features NVIDIA ConnectX-7 InfiniBand and VPI (InfiniBand or Ethernet) adapters, each running at 200 gigabits per second (Gb/s), to create a high-speed fabric for large-scale AI workloads. DGX A100 systems are also available with ConnectX-6 adapters.

DGX A100 integrates a tested and optimized DGX software stack, including an AI-tuned base operating system, all necessary system software, and GPU-accelerated applications, pre-trained models, and more from NGC™.

Enterprise Cloud Services

NGC offers a collection of cloud services, including NVIDIA NeMo LLM, BioNeMo, and Riva Studio for natural language understanding (NLU), drug discovery, and speech AI, respectively, as well as the NGC Private Registry for securely sharing proprietary AI software.

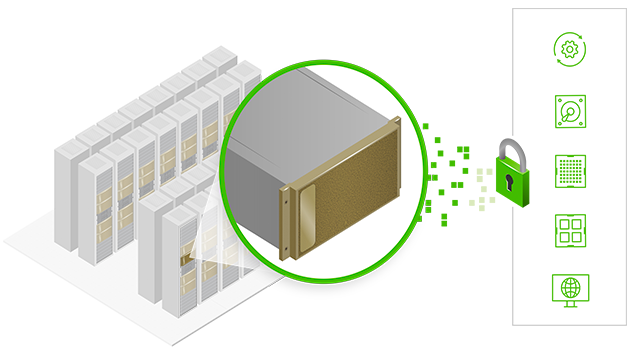

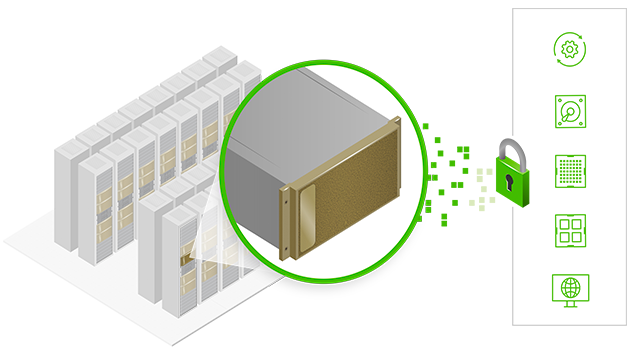

DGX A100 delivers the most robust security posture for AI deployments, with a multi-layered approach stretching across the baseboard management controller (BMC), CPU board, GPU board, self-encrypted drives, and secure boot.

| Form factor | Tower |
|---|---|
| Number of nodes | 1 |
| CPU | AMD EPYC™ |
| CPU manufacturer | AMD |
| CPU socket | System on Chip |
| Chipset | SoC |
| Number of CPUs | 1 |
| Memory slots | 8 DIMM slots |
| Memory type | DDR4 DIMM |
| Memory standard | DDR4-3200 MHz |
| Number of GPU/HPC accelerators | 4 |
| SSD/HDD interface | PCIe 3.0 x4/x8 |
| Drive bay size | 2.5" 15 mm |
| 2.5" bays | 1 |
| M.2 slots | 2 |
| Power supply rating | 1200W |
| Power supply certification | 80 PLUS Platinum |
| Redundant power supplies | Yes |
| Warranty | 1 year |

Configuration